Research Seminar: A brief introduction to Recurrent Neural Networks, Gated Recurrent Units and Long Short-Term Memory

This event has taken place


Time: 2.00pm - 3.00pm

Venue: PRJG2010, Penrhyn Road campus, Penrhyn Road, Kingston upon Thames, Surrey KT1 2EE

Price: free

Speaker(s): Demetris Lappas, PhD Student (Supervisor: Prof Dimitrios Makris)

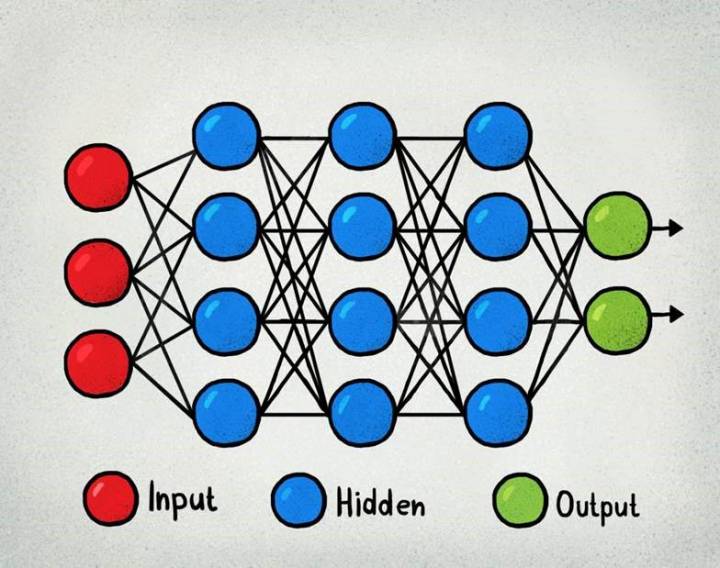

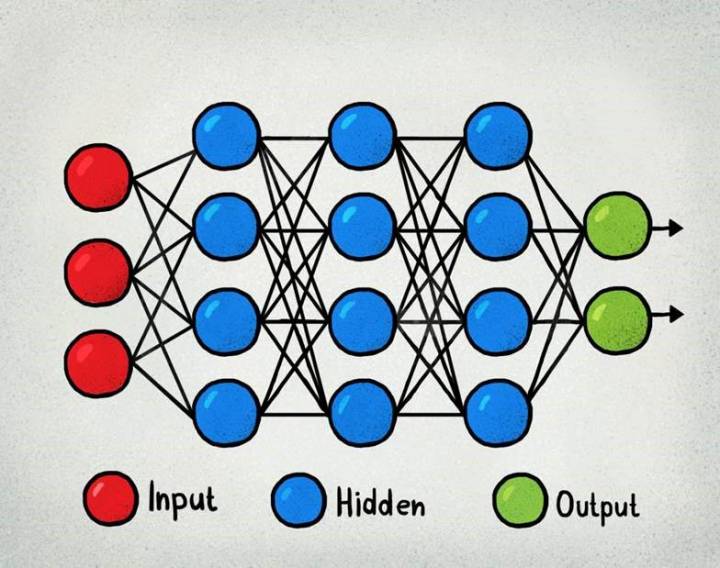

Recurrent Neural Networks (RNNs) underpin some of the most modern and sophisticated models in AI. They have shown strong performance in music generation, DNA sequencing, speech recognition, machine translation and many other natural language processing tasks. Unlike standard feedforward deep neural networks, RNNs carry a hidden state from one time step to the next, which makes them well suited to classifying sequential data. However, an RNN unrolled over many time steps is effectively a very deep network, and like very deep feedforward networks it suffers from the vanishing gradient problem. In this session we will briefly discuss some of the uses of RNNs, how they work, how they differ from feedforward deep neural networks and why they suffer from the vanishing gradient problem. We will then delve into Gated Recurrent Units (GRUs) and Long Short-Term Memory (LSTM), discussing their structures, the equations behind each of their gates and how they have been used to address the vanishing gradient problem.
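The abstract names three recurrences without writing them out. As a rough preview, the sketch below implements one time step of a vanilla RNN, a GRU and an LSTM in plain Python, assuming the common textbook formulations; it is not taken from the seminar itself. The GRU update uses the convention h_t = (1 - z_t) * h_{t-1} + z_t * h_cand, and some papers and libraries swap the roles of z_t and 1 - z_t. All parameter names (`W_z`, `U_z`, `b_z`, ...) and helper functions are hypothetical. The last function illustrates the vanishing gradient: for a scalar tanh RNN, the gradient of h_T with respect to h_0 is a product of T factors w * (1 - h_t^2), so it shrinks geometrically whenever those factors are below 1.

```python
import math
import random

# One time step of a vanilla RNN, a GRU and an LSTM in plain Python.
# Vectors are lists of floats; matrices are lists of rows.
# ASSUMPTION: the parameter names ("W_z", "U_z", "b_z", ...) and all helper
# functions are hypothetical, chosen for illustration only.


def matvec(m, v):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def add(*vs):
    """Element-wise sum of any number of equal-length vectors."""
    return [sum(xs) for xs in zip(*vs)]


def mul(a, b):
    """Element-wise (Hadamard) product."""
    return [x * y for x, y in zip(a, b)]


def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def tanh(v):
    return [math.tanh(x) for x in v]


def affine(p, gate, x, h):
    """W_gate x_t + U_gate h_{t-1} + b_gate."""
    return add(matvec(p["W_" + gate], x), matvec(p["U_" + gate], h), p["b_" + gate])


def rnn_step(p, x, h):
    # h_t = tanh(W_h x_t + U_h h_{t-1} + b_h)
    return tanh(affine(p, "h", x, h))


def gru_step(p, x, h):
    z = sigmoid(affine(p, "z", x, h))  # update gate
    r = sigmoid(affine(p, "r", x, h))  # reset gate
    # candidate state: the reset gate scales h_{t-1} before it is mixed in
    h_cand = tanh(add(matvec(p["W_h"], x), matvec(p["U_h"], mul(r, h)), p["b_h"]))
    # h_t = (1 - z_t) * h_{t-1} + z_t * h_cand  (one common convention)
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, h_cand)]


def lstm_step(p, x, h, c):
    f = sigmoid(affine(p, "f", x, h))  # forget gate
    i = sigmoid(affine(p, "i", x, h))  # input gate
    o = sigmoid(affine(p, "o", x, h))  # output gate
    g = tanh(affine(p, "g", x, h))     # candidate cell contents
    c_new = add(mul(f, c), mul(i, g))  # c_t = f_t * c_{t-1} + i_t * g_t
    h_new = mul(o, tanh(c_new))        # h_t = o_t * tanh(c_t)
    return h_new, c_new


def random_params(gates, n_in, n_hid, seed=0):
    """Small random weights and zero biases for each named gate."""
    rng = random.Random(seed)
    p = {}
    for g in gates:
        p["W_" + g] = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hid)]
        p["U_" + g] = [[rng.uniform(-0.5, 0.5) for _ in range(n_hid)] for _ in range(n_hid)]
        p["b_" + g] = [0.0] * n_hid
    return p


def scalar_rnn_gradient(w, u, xs):
    """dh_T/dh_0 for the scalar RNN h_t = tanh(w h_{t-1} + u x_t), h_0 = 0.

    Backpropagation through time multiplies one factor w * (1 - h_t^2) per step.
    """
    h, grad = 0.0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)
    return grad


if __name__ == "__main__":
    x, h0, c0 = [1.0, -1.0], [0.0] * 3, [0.0] * 3
    print("RNN: ", rnn_step(random_params(["h"], 2, 3), x, h0))
    print("GRU: ", gru_step(random_params(["z", "r", "h"], 2, 3), x, h0))
    print("LSTM:", lstm_step(random_params(["f", "i", "o", "g"], 2, 3), x, h0, c0))
    for T in (5, 20, 50):
        print(T, scalar_rnn_gradient(0.9, 1.0, [1.0] * T))
```

Running it prints one hidden state per cell, followed by gradient magnitudes that shrink rapidly as T grows. By contrast, the LSTM's additive cell update c_t = f_t * c_{t-1} + i_t * g_t gives gradients a path that is scaled only by the forget gate, so they can survive many steps when f_t stays close to 1.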

For further information about this event:

Contact: Nabajeet Barman

Email: SECevents@kingston.ac.uk
